Agents in Artificial Intelligence: Types, How They Work, and How They Compare

Types of Agents:

Simple Reflex Agents: These agents make decisions based on the current state of the environment and predefined rules without considering the past or future.

Reflex Agents with State: These agents consider both the current state and past experiences to make decisions, allowing them to have a limited form of memory.

Goal-Based Agents: These agents have specific goals they aim to achieve. They make plans and take action to reach those goals.

Utility-Based Agents: These agents assess the desirability or "utility" of different actions or outcomes and choose the one that maximizes their satisfaction or reward.

Learning Agents: Learning agents adapt and improve over time by acquiring knowledge and experience from their interactions with the environment.

How Agents Work:

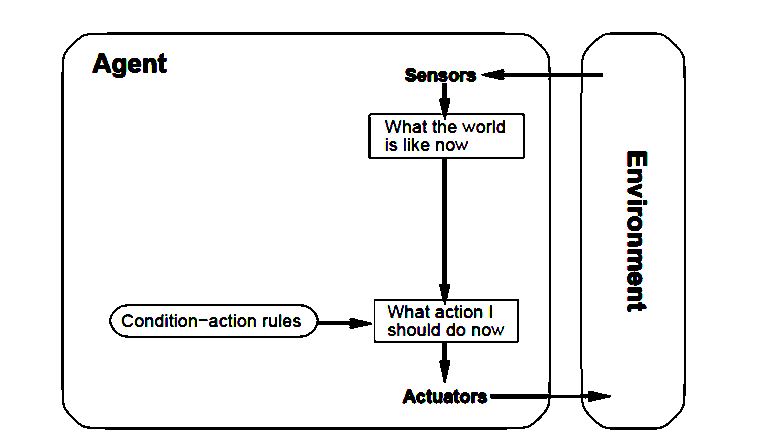

Simple Reflex Agents:

Simple Reflex Agents are like basic robots that make decisions based on what they see right now. They don't think about the past or the future. Here's how they work:

Perception: These agents use sensors to see what's happening in the environment right at this moment. It's like their eyes and ears.

Rules: They have simple rules or instructions that say, "If you see this, do that." For example, "If you see a red light, stop."

Action: When they sense something, they take immediate action based on their rules. It's like a reflex. If they see a red light, they stop the car.

Example: Think of a vending machine. It's a simple reflex agent. When you put money in, it checks which button you press. If you press "Coke," it gives you a Coke. It doesn't consider anything else; it simply acts on the button you pressed.
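The perception, rules, and action steps above can be sketched in a few lines of Python. This is only a minimal illustration: the rule table, the function name `simple_reflex_agent`, and the `"wait"` fallback are assumptions made for the example, not part of any library.

```python
# Hypothetical condition-action rules: "If you see this, do that."
RULES = {
    "red light": "stop",
    "green light": "go",
}


def simple_reflex_agent(percept, rules=RULES, default="wait"):
    """Return the action for the current percept only; nothing is remembered."""
    return rules.get(percept, default)


print(simple_reflex_agent("red light"))    # stop
print(simple_reflex_agent("green light"))  # go
print(simple_reflex_agent("fog"))          # wait (no rule matches)
```

The agent's whole "brain" is a lookup table, which is why it is fast but cannot handle situations its rules never anticipated.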

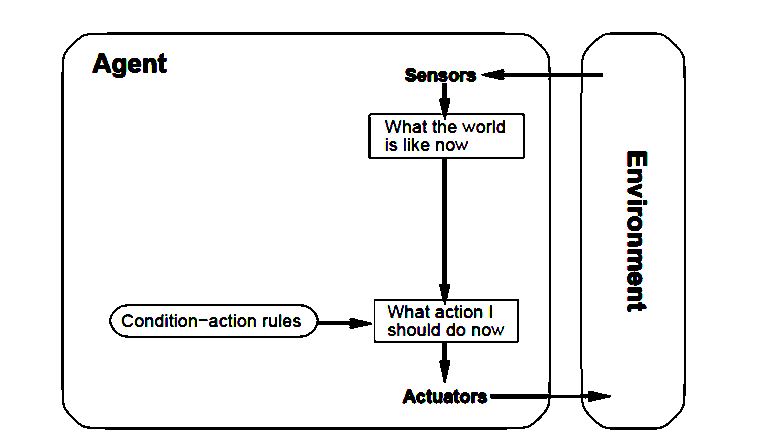

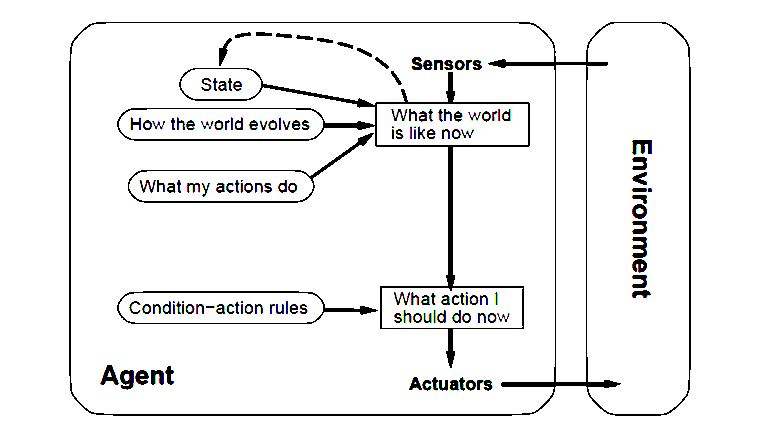

Reflex Agents with State:

Reflex Agents with State are like robots that consider not only what they see now but also what they've seen before. They have a "memory" to help them make better decisions. Here's how they work:

Perception: Just like simple reflex agents, they use sensors to see what's happening right now.

Rules and Memory: They have rules and a memory that remembers what they've seen in the past. Their rules might say, "If you see this and remember that, do this."

Action: When they sense something, they also check their memory to see if they've seen a similar situation before. Then, they take action based on both their current perception and what they remember.

Example: Think of a pet that has learned to sit when you say, "Sit." It remembers the command from the past (its state) and responds to it in the current situation. It doesn't just react to the word "Sit"; it uses what it remembers from before to know what to do.
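To make the "rules plus memory" idea concrete, here is a small sketch of a cleaning robot that remembers which locations it has already dealt with. The class name `StatefulReflexAgent`, the two locations "A" and "B", and the action names are all assumptions chosen for illustration.

```python
class StatefulReflexAgent:
    """Reflex agent with a small memory of what it has already seen."""

    def __init__(self, locations=("A", "B")):
        self.locations = set(locations)
        self.done = set()  # internal state: locations known to be clean

    def act(self, location, is_dirty):
        # Either way, this location will be clean after this step.
        self.done.add(location)
        if is_dirty:
            return "clean"  # rule based on the current percept alone
        if self.done >= self.locations:
            return "idle"   # rule that also uses memory: everything is done
        return "move"       # some location has not been checked yet


agent = StatefulReflexAgent()
print(agent.act("B", False))  # move: B is clean, but A has not been checked
print(agent.act("A", True))   # clean
print(agent.act("B", False))  # idle: memory says both locations are done
```

Notice that the same percept (location B, not dirty) leads to two different actions. The only thing that changed is what the agent remembers.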

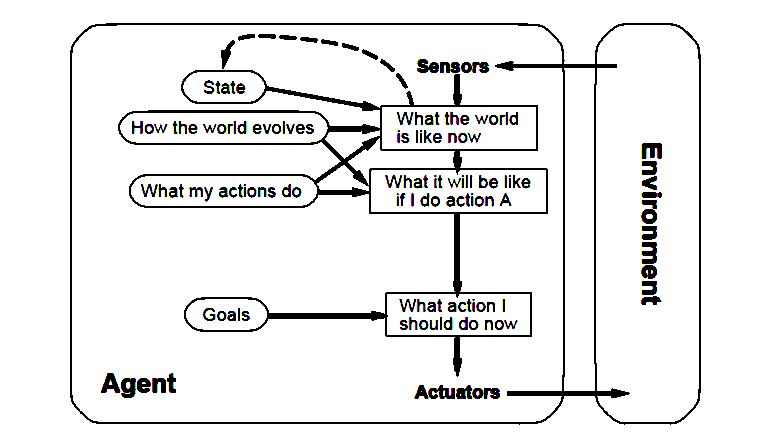

Goal-based Agents:

Goal-based Agents are like students with a clear goal in mind. They work to achieve that goal by making plans and taking steps toward it. Here's how they work:

Goal Setting: They start by setting a goal, which is like deciding what they want to achieve.

Planning: They make a plan, which is like creating a roadmap to reach their goal. It includes steps or actions to take.

Decision Making: They constantly make decisions to choose the best actions that will lead them closer to their goal. It's like deciding which path to take on the roadmap.

Example: Imagine a student with a goal to get good grades. They set the goal, create a study plan (the roadmap), and make decisions like studying, attending classes, and doing homework to achieve that goal. They always keep their goal in mind to guide their actions.
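The goal setting and planning steps above can be sketched as a search for a path to the goal. The example below uses breadth-first search, which is just one of many possible planning methods; the `ROADMAP` dictionary and the place names are made up for illustration.

```python
from collections import deque

# Hypothetical roadmap: each place lists the places reachable in one step.
ROADMAP = {
    "home": ["library", "cafe"],
    "library": ["classroom"],
    "cafe": ["library"],
    "classroom": [],
}


def plan(start, goal, roadmap=ROADMAP):
    """Breadth-first search: return the shortest path from start to goal."""
    frontier = deque([[start]])  # paths waiting to be extended
    visited = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for nxt in roadmap.get(path[-1], []):
            if nxt not in visited:
                visited.add(nxt)
                frontier.append(path + [nxt])
    return None  # no sequence of steps reaches the goal


print(plan("home", "classroom"))  # ['home', 'library', 'classroom']
print(plan("classroom", "home"))  # None
```

Unlike a reflex agent, this agent looks ahead: it considers where each step leads before choosing, and changing the goal changes the plan without rewriting any rules.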

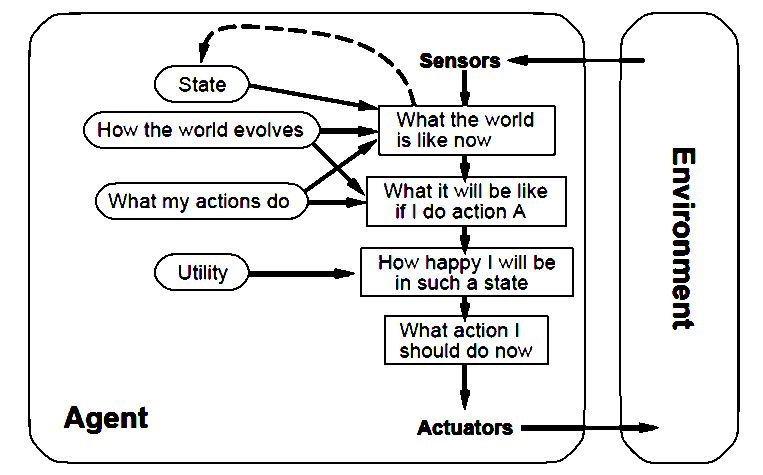

Utility-based Agents:

Utility-based Agents are like students who make choices based on what will give them the most satisfaction or reward. They aim to maximize their happiness. Here's how they work:

Assigning Values: They assign values or "happiness points" to different choices or outcomes. The higher the value, the happier it makes them.

Evaluation: When faced with a decision, they evaluate the options based on the happiness points each choice will bring.

Choice: They choose the option that gives them the most happiness points. It's like picking the most rewarding path.

Example: Think of a student deciding what to do in the evening. They assign values to choices: studying might be 5 points, watching a favorite TV show 8 points, and going out with friends 10 points. They choose to go out with friends because it gives them the most happiness points.
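The student's evening decision can be written as a tiny program. The point values are the ones from the example above; the names `UTILITY` and `choose` are assumptions for this sketch.

```python
# "Happiness points" taken from the student example.
UTILITY = {
    "study": 5,
    "watch TV": 8,
    "go out with friends": 10,
}


def choose(options, utility=UTILITY):
    """Evaluate every available option and pick the one with the most points."""
    return max(options, key=lambda option: utility[option])


print(choose(UTILITY))                # go out with friends
print(choose(["study", "watch TV"]))  # watch TV
```

The second call shows the benefit of numeric values over a yes/no goal: if the friends are unavailable, the agent can still rank the remaining options.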

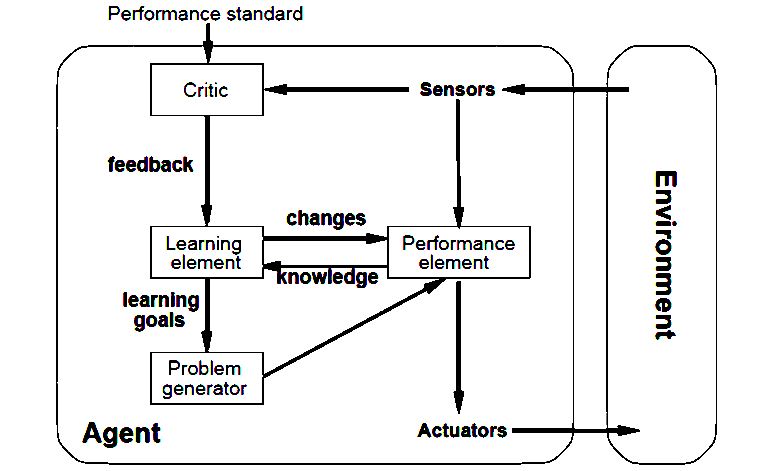

Learning Agents:

Learning Agents are like students who improve by studying and gaining experience. They get better over time. Here's how they work:

Learning: They learn from their experiences and mistakes, just like students learn from their studies and practice.

Adaptation: They change their actions based on what they've learned. If something didn't work before, they try a different approach next time.

Improvement: With each new experience, they get better at making decisions, just as students get better at subjects through practice.

Example: Think of a student who initially struggles with math. They keep practicing, learn from their mistakes, and gradually improve their math skills. Learning agents do something similar with the tasks they're designed for.
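The learning, adaptation, and improvement steps above can be sketched with an agent that keeps a running average of the reward each action has earned. This is a simplified illustration, not a full learning algorithm; the class name, the two actions, and the reward numbers in `REWARD` are all invented for the example.

```python
import random


class LearningAgent:
    """Learns an average reward per action and comes to prefer the best one."""

    def __init__(self, actions, explore=0.1, seed=0):
        self.values = {a: 0.0 for a in actions}  # learned estimate per action
        self.counts = {a: 0 for a in actions}    # how often each was tried
        self.explore = explore
        self.rng = random.Random(seed)

    def act(self):
        untried = [a for a, n in self.counts.items() if n == 0]
        if untried:
            return untried[0]  # try every action at least once
        if self.rng.random() < self.explore:
            return self.rng.choice(list(self.values))  # occasional experiment
        return max(self.values, key=self.values.get)   # use what was learned

    def learn(self, action, reward):
        # Incremental average: nudge the estimate toward the observed reward.
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


# Hypothetical environment: practicing pays off more than guessing.
REWARD = {"guess": 0.2, "practice": 1.0}

agent = LearningAgent(["guess", "practice"])
for _ in range(20):
    action = agent.act()
    agent.learn(action, REWARD[action])

print(max(agent.values, key=agent.values.get))  # practice
```

Nobody told the agent that practicing is better. It found out by trying both actions and remembering how each one turned out.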

Differences between Simple Reflex Agents, Reflex Agents with State, Goal-based Agents, Utility-based Agents, and Learning Agents:

| | Simple Reflex Agents | Reflex Agents with State | Goal-based Agents | Utility-based Agents | Learning Agents |
| --- | --- | --- | --- | --- | --- |
| Decision-Making Process | Follow rules based on what they see. | Use history and what they see. | Follow a plan to reach a goal. | Choose what's best for success. | Get better with experience. |
| Applicability | Good for easy places with clear rules. | Useful when things are a bit hidden, and history is important. | Work well where you need a plan to reach goals. | Great when goals clash or aren't just yes/no. | Can handle many places but need to learn from experience. |
| Flexibility | Not very flexible, follow strict rules. | More flexible than simple ones but still rule-bound. | Very flexible, can adapt to different goals and changing situations. | Flexible, can balance multiple goals and trade-offs. | Extremely flexible, adapt and improve in different situations by learning. |
| Handling Uncertainty | Not great with uncertainty, struggle with hidden things. | Handle uncertainty better but still have limits. | Good at planning even when things are uncertain. | Deal with uncertainty by thinking about what's most useful. | Get better in uncertain or changing situations by learning. |
| Efficiency | Super quick because they have strict rules. | Pretty fast, but they need to remember a bit. | Not as quick as reflex agents, but they can handle harder jobs. | Speed depends on how complicated the math is. | Start slower, but get faster as they learn. |
| Adaptability | Not good at changing, need manual fixes for updates. | Can adapt a bit using their memory. | Excellent at adapting to different goals and places. | Can adjust to new values and goals. | Super adaptable, get better all on their own. |